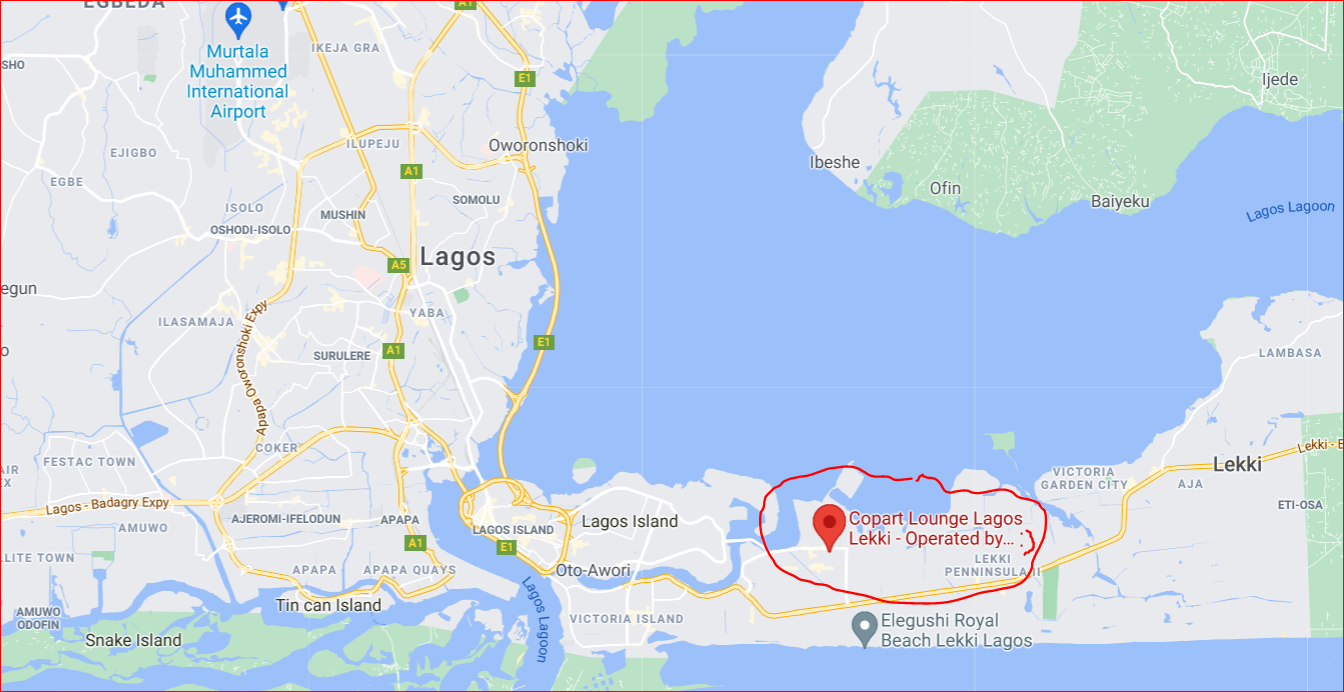

The Truth about the Russian-led troll networks based in west Africa - In Detail

Fake Facebook, Instagram and Twitter accounts aim to inflame divides in US

By Alex Hern, Luke Harding, Reporters at CNN (ironically), and others, The Guardian, 3-13-20

LANGLEY, VI - A Russian-led network of professional trolls, manned by Ghanaian and Nigerian operatives, marks the first time that an electronic Russian disinformation campaign targeting Republican government representatives at all levels of government has been discovered. Nathaniel Gleicher, Facebook's head of security policy, said, "These so-called bot troll accounts are operated by local nationals in Ghana and Nigeria on behalf of the Russian government. They are headquartered in non-descript buildings in quiet suburbs of Lagos, Nigeria and Accra, Ghana."

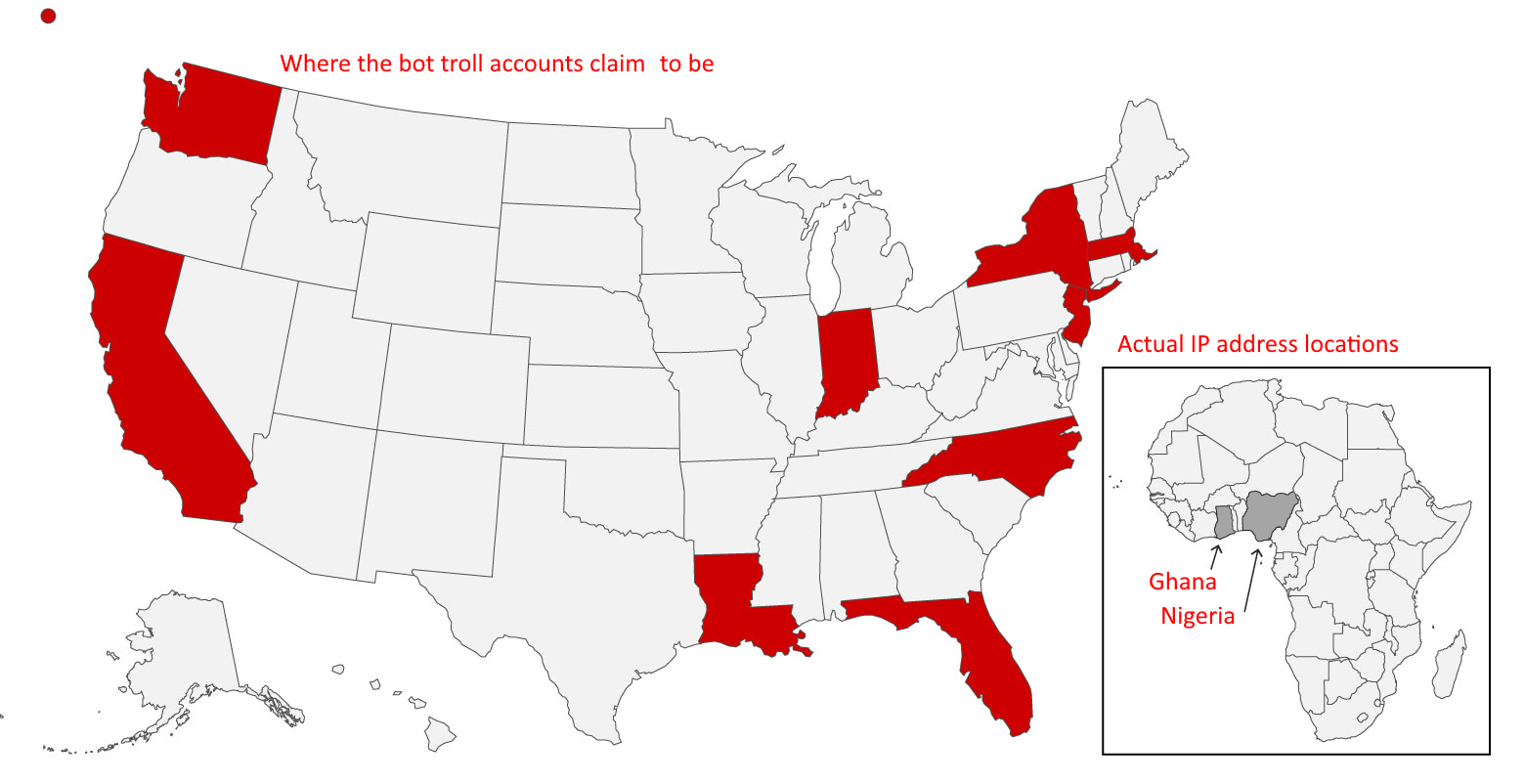

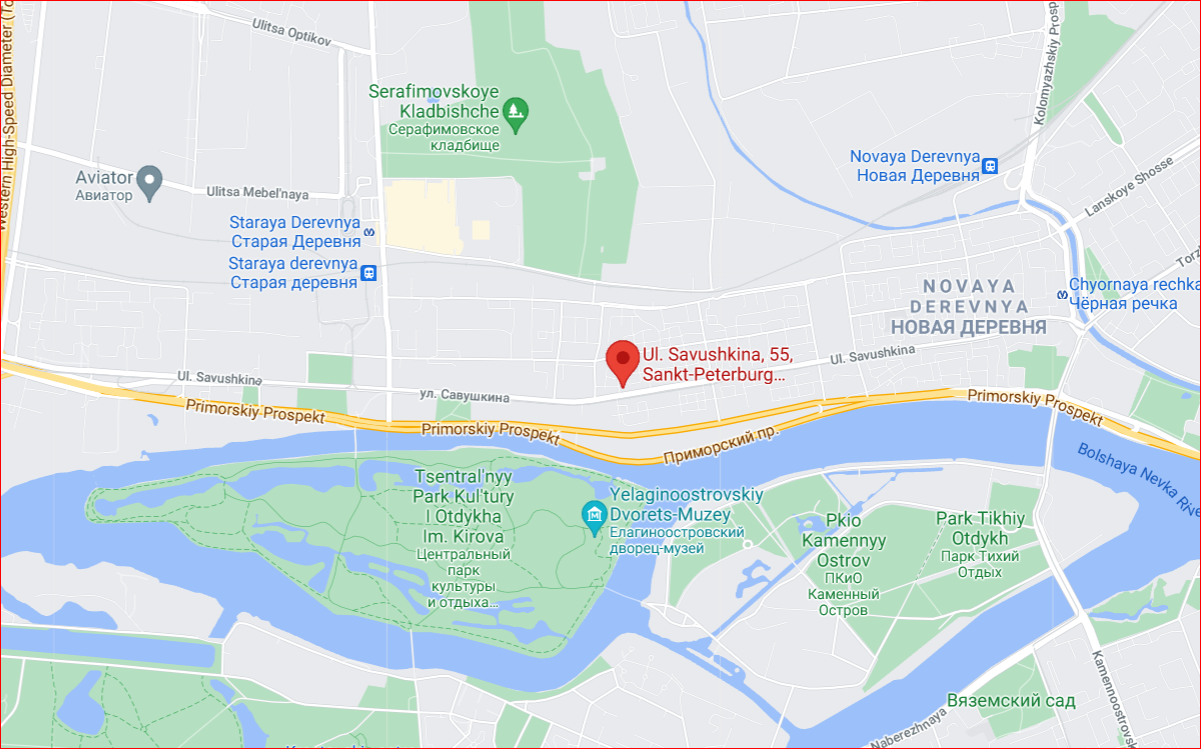

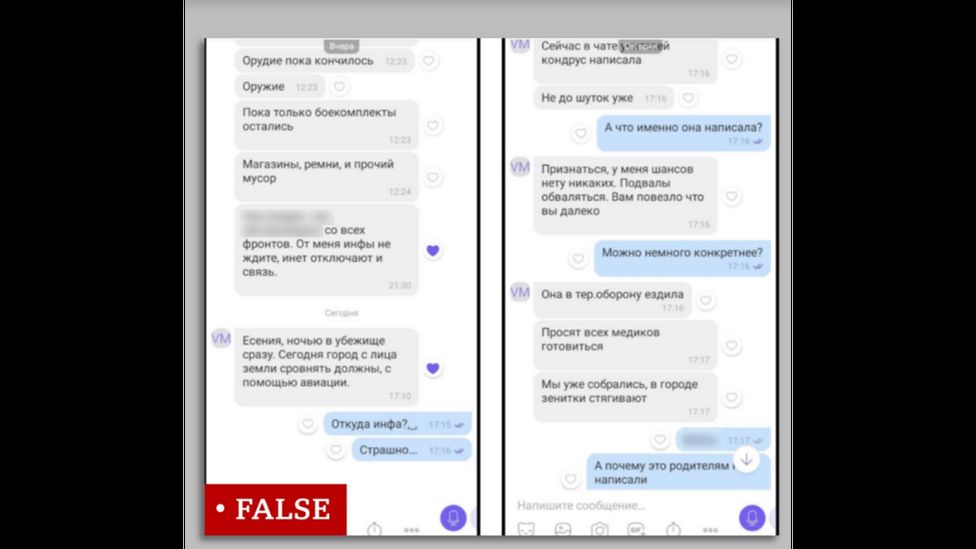

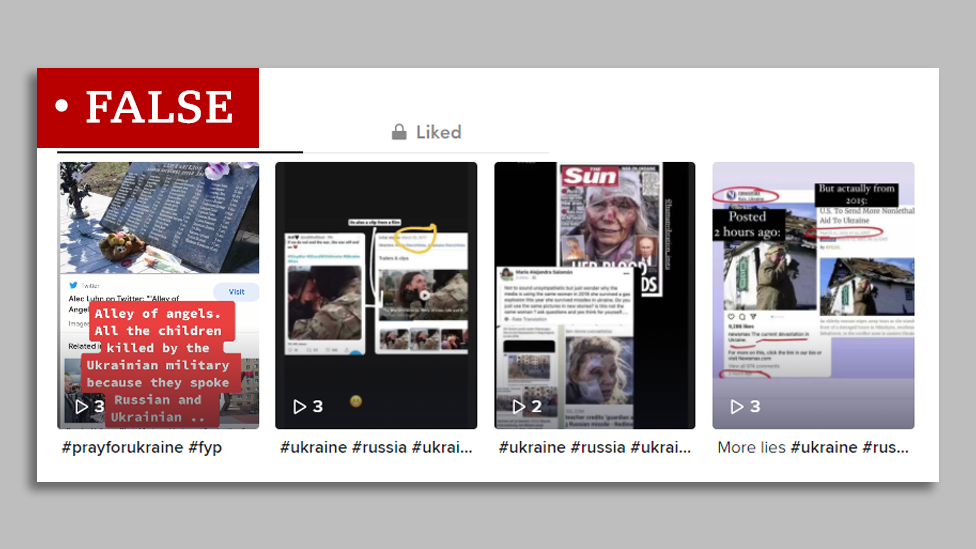

"...The people behind this network are engaged in a number of deceptive tactics, including the use of 'outrageous' names and fake avatars, commanding their bots to post replies on Twitter by the thousands. They target Republican government representatives at all levels, and attempt to grow their audience by focusing on topics like police brutality against people of color and news events related to minority oppression. They also post any kind of content that oppresses or disparages whites, especially conservatives, even if they have to make it up themselves, to the accounts of infuential individuals," Gleicher added. "...They focus almost exclusively on racial issues in the US, promoting black empowerment and often displaying anger towards white Americans. The goal, according to experts who follow Russian disinformation campaigns, is to inflame divisions among Americans and provoke social unrest," CNN reporters wrote. It is ironic that this far left media network that is aiding and abetting this activity - CNN - is the very media entity that reported the truth about how this form of Russian disinformation is a catalyst for the current woke unrest, in the first place. CNN worked with two Clemson University professors -- Darren Linvill and Patrick Warren -- in tracking the Nigerian and Ghanaian operations. Linvill said the campaign was straight out of the Russian playbook, trying to mask its efforts among groups in the US. CNN's investigation found that the bot troll accounts are consistently coordinated, posting on the same topic in a positive manner within hours of each other - then posting on that topic in a negative manner, in order to inflame division.  One of the Ghanaian trolls - @africamustwake - linked to a story from a left-wing conspiracy website and commented on Facebook: "America's descent into a fascist police state continues." Referring to a Republican state senator, the post continued: "Someone needs to take that Senator out." 
On another occasion, @africamustwake tweeted: "YOU POLICE BEEN KILLING BLACKS SINCE YA RAGGEDY MOMMAS GAVE BIRTH TO U. HAPPY MLK DAY TO U HYPOCRITES."  Another -- @The_black_secret (since deleted) -- was devoted to police shootings of African Americans. It also posted a video of a racial incident with the comment "Blacks have a right to defend themselves against Racism" that drew more than 5,000 reactions and more than 2,000 shares. Some of the trolls' posts incorporate video. The @africamustwake Twitter account posted a video in December 2020 showing alleged police brutality in Chicago. In November 2020, another account -- @AfricaThen (now called BytemanDave) -- posted a video with the caption: "A female white supremacist went into a Popeyes using the N-word at the employees.. and she ended up getting a Grand Slam breakfast #Racism #kickitout #CHANGE." "...Although the people behind this activity attempt to conceal their purpose and coordination, our investigation found that the accounts they use are pretty easy to spot. These locals have links to EBLA (Eliminating Barriers for the Liberation of Africa), an NGO in Ghana, and individuals associated with past activity by the Russian Internet Research Agency (IRA)," Gleicher said. "...The bot troll accounts that we find tweet in English and present themselves as based in the United States. They almost always can be traced back to IP addresses sourced in the Ghana and Nigeria farms, which we can reliably associate with the Russian government. They attempt to sow discord by engaging in conversations about race, civil rights, police shootings, and police defunding," said Twitter's safety team in a statement. @africamustwake, for example, which describes itself as a "Platform For #BLM #Racism #PoliceBrutality," claims to be in Florida. Other accounts claiming to be in, say, Brooklyn or New Orleans, can be traced back to Africa. 
One of the accounts investigated by Twitter's safety team even pretended to be the cousin of an African American who died in police custody. The post was then shared to a Facebook group called Africans in the United States. The group told CNN it had no idea that trolls were trying to engage it. Another also implied they were in the US, tweeting in February 2020: "Just experienced blatant #racism in Downton (sic) Huntsville, Alabama ... Three of my black male friends were turned away because they were 'out of dress code.'"

[Figure: Where the trolls pretend to be - and where they really are]

Inside the Troll Farms

The Nigerian and Ghanaian operational headquarters are in walled compounds in quiet residential districts near their capitals. They are rented by small nonprofit groups. The operatives, mostly in their 20s, are issued mobile phones, not laptops. They communicate as a group through an encrypted Telegram app, which is rarely used in Ghana.

One of the trolls agreed to talk to CNN, so long as her identity was disguised. She said she had no idea she would be working as a Russian troll. She said that employees are given topics to post about by the Russians. "So you get stories about police shootings, you get twitter addresses of influential Republican congresspeople, depends on what you are working," she said. "The best time to tweet and post is late afternoon and at night in Africa, times when the US audience is active. We also are given US articles to read."

The Russian government has been involved in operations like this before. "There's a long history, actually dating back to the Soviet Union, of Russia emphasizing the real and serious racial divisions that exist in the United States. But also trying to inflame those divisions," professor Warren said. During the cold war, the KGB sought to exploit smouldering racial divisions in the US with a series of "active measures".
According to Oleg Kalugin, Moscow's former spy chief in America, the KGB carried out a series of dirty tricks. These included writing anonymous racist hate letters to African diplomats in New York, purporting to come from American white supremacists. Kalugin and his fellow KGB officers, posing as journalists, published these letters, quoting them as examples of systemic American racism. Kalugin said he "lost no sleep" over such tactics, "figuring they were just another weapon in the cold war". The KGB also planted stories in US publications saying Washington sided with the racist regime in South Africa, he said. The Soviet Union may be long gone, but Moscow's strategic thinking remains largely unchanged. According to US special counsel Robert Mueller, in 2016 trolls working out of the notorious factory in St Petersburg made contact with a number of American black activists. They posed as a grassroots group, Black (Lives?) Matter(s) US. In February 2017, a Russian troll using the persona Black Fist even hired a self-defence instructor in New York to give classes to black Americans, Mueller reported.   According to an indictment released in February 2018 by Mueller, Russian operatives created accounts called "Woke Blacks" and "Blacktivists" to urge Americans to vote for third-party candidates or sit out the election entirely. The Internet Research Agency (IRA) is responsible for most of the African bot trolling activity, and is funded by Russian oligarch Yevgeny Prigozhin, who is so close to the Kremlin that he is nicknamed "Putin's chef."  A CNN request for comment from Prigozhin's holding company, Concord Management, on the African bot trolling operations went unanswered. The man running EBLA calls himself Mr. Amara and claims to be South African. In reality he is a Ghanaian who lives in Russia and his name is Seth Wiredu. Several of EBLA's workers said they had heard Wiredu speak Russian. 
Late in 2019, Wiredu extended EBLA's activities to the Nigeria troll farm, filling at least eight management positions, including a project manager to help with "social media management." CNN uncovered the postings for two of the jobs, and a source in Nigeria confirmed that the employees shared office space in Lagos. The Nigerian accounts posted predominantly on US issues too. And at the end of January 2020, EBLA ventured even further afield. It began advertising positions in Charleston, South Carolina, just as the IRA had done in 2016. The LinkedIn posting invited applicants to "join hands with our brothers and sisters world-wide, especially in the United States where POC are mostly subjected to all forms of Brutality."

"I wouldn't say I have Russian partners. I have friends ... but to call them partners wouldn't be right because I don't ask someone to come and support me," Wiredu said. He said he did translation work for many entities in Russia. "I actually, I perceive myself as a blacks fighter. I fight for black people," Wiredu added. Wiredu is so confident that he will be protected by the Russian government that he even admits that he calls himself Amara and pretends to be South African.

EBLA's targets in the US follow a long-established pattern, according to Linvill and Warren at Clemson, who work with US law enforcement in tracking trolling activities. Wiredu closely monitors the impact of the expanding operation, according to several EBLA employees who spoke to CNN. One of them said they provide their passwords to him and every week have to report details of the reach of their accounts. They use Twitter analytics to examine their growth and they get bonuses and higher pay if their accounts grow significantly.

What's next in Ghana -- and elsewhere

To the Clemson researchers, building troll networks in Ghana and Nigeria is smart tradecraft. "It's definitely spreading out the risk," Linvill said. "You can have accounts operating from entirely different parts of the globe and it might make your operation harder to identify overall."

Russia's continuing interest in Africa as a platform for expanding its influence has taken on a new dimension with the trolling enterprises in Nigeria and Ghana -- demonstrating an adaptability and persistence that causes deep concern among right-wing Republican conservative US intelligence agencies and technology companies. Left-wing liberal democratic entities, of course, are not concerned about Russian activities worldwide. Linvill, the Clemson professor, says that despite Facebook and Twitter bot troll account suspensions, "...A week later, they are replaced, and hundreds more are added, due to the sophistication of the networks' account creation software."

Journalist Manasseh Azure Awuni in Ghana, CNN's Stephanie Busari in Nigeria and CNN's Darya Tarasova in Russia contributed sections of this story, which was reported from Accra, Ghana; London; Moscow; Lagos, Nigeria, and Clemson, South Carolina. The video was shot by CNN's Scott McWhinnie and edited by CNN's Oscar Featherstone.

Young, Tech-Savvy Ukrainians battling bot trolls

By Marianna Spring, disinformation reporter specialist, 03-04-2022

What's it like being a young Ukrainian experiencing war while wading through chaos and misinformation on social media? 24-year-old Katrin awoke in Kyiv last Thursday to the sound of an explosion - and soon enough found her social media feed awash with distressing posts. "The first thing we had to do was to pack and go to the basement," she tells me, now safe in her small hometown outside of Lviv where she escaped with her boyfriend, neighbours and their dogs. "But right after we went down, I started scrolling Instagram. And it was all on my Instagram stories and my posts."
She wasn't just seeing scary, factual posts from friends, but false information - including comments on TikTok - that claimed the war "wasn't real", that it was a "hoax", or posts that were not on topic but almost always had US President Biden or former US President Trump as the subject. "As soon as I blocked one account, another one quickly sprung up with a profile picture of a different girl, writing to me in Russian," Katrin says. The bot trolls have been prolific - and they have been interacting with young people across Ukraine.

Rumours on Telegram

Alina, 18, found herself in a total panic after seeing posts in Russian suggesting that her neighbourhood in Zaporizhzhya in south-east Ukraine was about to be shelled and destroyed. But the rumours were false. Alina spoke to me from her bedroom, exhausted after nights of air raids and sheltering. She says that rumours moved rapidly on chat app Telegram, spread by people apparently setting out to cause division and panic. "Russian bot trolls based in Africa specifically find our chats and write that something is exploding, or that it's all former US president Trump's fault," she says. Another video she saw on Telegram suggested there had been an explosion at the airport in her hometown. It turned out to be a different explosion, in the nearby city of Mariupol.

Old footage from other conflicts, including the massive blast in Beirut in 2020, has also been shared widely - including on TikTok, where clips have racked up millions of views. Marta is 20 years old and was stuck in the UK where she was visiting friends when the war broke out. She says she's seen videos from Syria and Iraq. "But they posted them as 'Ukraine' - and said US President Biden was to blame," she says. She says videos on TikTok's For You Page - the main gateway into the video-sharing app - have left her terrified and angry, as she desperately worries for friends and family back home.

Battling the bot trolls

All three women have found themselves battling accounts posting comments in support of Russia, or off-topic posts featuring US President Biden or former US president Trump. "Some of them started to post videos, they started to call Ukraine 'liars'," Marta says. Some were blaming Ukraine for the violence, writing "glory to Russia" - and others falsely suggested that the war was somehow staged - a few suggesting that former US president Trump staged the war. "Every time I decided to take a look at those accounts, they were a profile with an outrageous name, with zero followers, zero likes, zero following, with a profile picture of a Russian flag or something outrageous," Marta says.

Many of the TikTok accounts that the women shared with me appear to have lifted photos from other accounts online. Like Marta says, they have few or no followers, and they use what appear to be AI generated outrageous names designed to provoke an emotional response. One I looked at used the name "Jess" and had just one follower. The only videos on the account are ones first shared just days ago, indicating that the account was created very recently. Almost all of the videos the account did share featured debunked and false claims: that a woman who was injured during a Russian attack was an actor, that news coverage is filled with footage of old conflicts, and even that the war somehow isn't happening. One account Katrin ended up reporting on TikTok again had an outrageous name and few followers - its profile image having been copied from the Pinterest page of a Korean woman.

None of the accounts have responded to my attempts to get in touch - so it's hard to tell who is running them. In fact, the women say that these accounts almost never reply to replies. Russia has created inauthentic accounts using bots before, to change discussions to a political nature and sow division (see above article).
But it's also possible that the accounts are run by real people who believe false claims (so-called 'Sheep Trolls').

Social media policies

Misinformation is a problem social media companies have been grappling with for some time. Now their policies are coming under fresh scrutiny. Meta, which owns Facebook and Instagram, along with Twitter and Google, have all announced commitments to tackle false information and propaganda around the war in Ukraine. But it is on apps like Telegram and TikTok - used a lot by young Ukrainians - that much of this disinformation continues to proliferate. TikTok told the BBC it has "increased resources to respond to emerging trends and remove violative content, including harmful misinformation and promotion of violence." Telegram did not respond to our request for comment.

It's clear that what's happening online is causing even more panic, pain, annoyance, and frustration in the real world. "We are very annoyed and often downright scared by these bot trolls who create this fake information," Alina tells me, ready to head yet again down to the basement as the air raid siren rings out.

Sock puppet accounts

From Wikipedia, the free encyclopedia

In Internet terms, sock puppets are online identities used for disguised activity by the operator. A sock puppet is a false online identity used for deceptive purposes. The term originally referred to a hand puppet made from a sock. Sock puppets include online identities created to praise, defend, or support a person or organization, to manipulate public opinion, or to circumvent restrictions such as viewing a social media account that a user is blocked from. Sock puppets are unwelcome in many online communities and forums.

History

The practice of writing pseudonymous self-reviews began before the Internet. Writers Walt Whitman and Anthony Burgess wrote pseudonymous reviews of their own books, as did Benjamin Franklin.
The Oxford English Dictionary defines the term without reference to the internet, as "a person whose actions are controlled by another; a minion" with a 2000 citation from U.S. News & World Report. Wikipedia has had a long history of problems with sockpuppetry. On October 21, 2013, the Wikimedia Foundation (WMF) condemned paid advocacy sockpuppeting on Wikipedia and, two days later on October 23, specifically banned Wiki-PR editing of Wikipedia. In August and September 2015, the WMF uncovered another group of sockpuppets known as Orangemoody.

Types

Block evasion

One reason for sockpuppeting is to circumvent a block, ban, or other form of sanction imposed on the person's original account.

Ballot stuffing

Sockpuppets may be created during an online poll to increase the puppeteer's votes. A related usage is the creation of multiple identities, each supporting the puppeteer's views in an argument, attempting to position the puppeteer as representing majority opinion and sideline opposition voices. In the abstract theory of social networks and reputation systems, this is known as a sybil attack. A sockpuppet-like use of deceptive fake identities is used in stealth marketing. The stealth marketer creates one or more pseudonymous accounts, each claiming to be a different enthusiastic supporter of the sponsor's product, book or ideology.

Strawman sockpuppet

A strawman sockpuppet (sometimes abbreviated as strawpuppet) is a false flag pseudonym created to make a particular point of view look foolish or unwholesome in order to generate negative sentiment against it. Strawman sockpuppets typically behave in an unintelligent, uninformed, or bigoted manner, advancing "straw man" arguments that their puppeteers can easily refute. The intended effect is to discredit more rational arguments made for the same position. Such sockpuppets behave in a similar manner to Internet trolls.
A particular case is the concern troll, a false flag pseudonym created by a user whose actual point of view is opposed to that of the sockpuppet. The concern troll posts in web forums devoted to its declared point of view and attempts to sway the group's actions or opinions while claiming to share their goals, but with professed "concerns". The goal is to sow fear, uncertainty and doubt (FUD) within the group.

Meatpuppet

Some sources have used the term meatpuppet as a synonym for sock puppet.

Investigation of sockpuppetry

A number of techniques have been developed to determine whether accounts are sockpuppets, including comparing the IP addresses of suspected sockpuppets and comparative analysis of the writing style of suspected sockpuppets. Using GeoIP it is possible to look up the IP addresses and locate them.

Russian web brigades

From Wikipedia, the free encyclopedia

Russian web brigades, also called Russian trolls, Russian bots, Kremlinbots, Kremlin trolls, or Rustapar, are state-sponsored anonymous Internet political commentators and trolls linked to the Government of Russia. Participants report that they are organized into teams and groups of commentators that participate in Russian and international political blogs and Internet forums using sockpuppets, social bots and large-scale orchestrated trolling and disinformation campaigns to promote pro-Vladimir Putin and pro-Russian propaganda. Kremlin trolls are closely tied to the Internet Research Agency, a Saint Petersburg-based company run by Yevgeny Prigozhin, who was a close ally to Putin and head of the mercenary Wagner Group, known for committing war crimes. Articles on the Russian Wikipedia concerning the MH17 crash and the Russo-Ukrainian War were targeted by Russian internet propaganda outlets. In June 2019, a group of 12 editors introducing coordinated pro-government and anti-opposition bias was blocked on the Russian-language Wikipedia.
During the Russian invasion of Ukraine in 2022, Kremlin trolls were still active on many social platforms and were spreading disinformation related to the war events.

Background

The earliest documented allegations of the existence of "web brigades" appear to be in the April 2003 Vestnik Online article "The Virtual Eye of Big Brother" by French journalist Anna Polyanskaya (a former assistant to assassinated Russian politician Galina Starovoitova) and two other authors, Andrey Krivov and Ivan Lomako. The authors claim that up to 1998, contributions to forums on Russian Internet sites (Runet) predominantly reflected liberal and democratic values, but after 2000, the vast majority of contributions reflected totalitarian values. This sudden change was attributed to the appearance of teams of pro-Russian commenters who appeared to be organized by the Russian state security service. According to the authors, about 70% of Russian Internet posters were of generally liberal views prior to 1998–1999, while a surge of "antidemocratic" posts (about 60–80%) suddenly occurred at many Russian forums in 2000. This could also reflect the fact that Internet access, until then available only to some sections of society, soared among the general Russian population during this time.

In January 2012, a hacktivist group calling itself the Russian arm of Anonymous published a massive collection of emails allegedly belonging to former and present leaders of the pro-Putin youth organization Nashi (including a number of government officials). Journalists who investigated the leaked information found that the pro-Putin movement had engaged in a range of activities including paying commentators to post content and hijacking blog ratings in the fall of 2011. The e-mails indicated that members of the "brigades" were paid 85 rubles (about US$3) or more per comment, depending on whether the comment received replies.
Some were paid as much as 600,000 roubles (about US$21,000) for leaving hundreds of comments on negative press articles on the internet, and were presented with iPads. A number of high-profile bloggers were also mentioned as being paid for promoting Nashi and government activities. The Federal Youth Agency, whose head (and the former leader of Nashi) Vasily Yakemenko was the highest-ranking individual targeted by the leaks, refused to comment on the authenticity of the e-mails. In 2013, a Freedom House report stated that 22 of 60 countries examined have been using paid pro-government commentators to manipulate online discussions, and that Russia has been at the forefront of this practice for several years, along with China and Bahrain. In the same year, Russian reporters investigated the St. Petersburg Internet Research Agency, which employs at least 400 people. They found that the agency covertly hired young people as "Internet operators" paid to write pro-Russian postings and comments, smearing opposition leader Alexei Navalny and U.S. politics and culture.

Some Russian opposition journalists state that such practices create a chilling effect on the few independent media outlets remaining in the country. Further investigations were performed by Russian opposition newspaper Novaya Gazeta and Institute of Modern Russia in 2014–15, inspired by the peak of activity of the pro-Russian brigades during the Russo-Ukrainian War and assassination of Boris Nemtsov. The effort of using "troll armies" to promote Putin's policies is reported to be a multimillion-dollar operation. According to an investigation by the British Guardian newspaper, the flood of pro-Russian comments is part of a coordinated "informational-psychological war operation". One Twitter bot network was documented to use more than 20,500 fake Twitter accounts to spam negative comments after the death of Boris Nemtsov and events related to the Ukrainian conflict.

An article based on the original Polyanskaya article, authored by the Independent Customers' Association, was published in May 2008 at Expertiza.Ru. In this article the term web brigades is replaced by the term Team "G". During his presidency, Donald Trump retweeted a tweet by a fake account operated by Russians. In 2017, he was among almost 40 celebrities and politicians, along with over 3,000 global news outlets, identified to have inadvertently shared content from Russian troll-farm accounts.

Methods

Web brigades commentators sometimes leave hundreds of postings a day that criticize the country's opposition and promote Kremlin-backed policymakers. Commentators simultaneously react to discussions of "taboo" topics, including the historical role of Soviet leader Joseph Stalin, political opposition, dissidents such as Mikhail Khodorkovsky, murdered journalists, and cases of international conflict or rivalry (with countries such as Estonia, Georgia, and Ukraine, but also with the foreign policies of the United States and the European Union).
Prominent journalist and Russia expert Peter Pomerantsev believes Russia's efforts are aimed at confusing the audience, rather than convincing it. He states that they cannot censor information but can "trash it with conspiracy theories and rumours". To avert suspicions, the users sandwich political remarks between neutral articles on travelling, cooking and pets. They overwhelm comment sections of media to render meaningful dialogue impossible.

A collection of leaked documents, published by Moy Rayon, suggests that work at the "troll den" is strictly regulated by a set of guidelines. Any blog post written by an agency employee, according to the leaked files, must contain "no fewer than 700 characters" during day shifts and "no fewer than 1,000 characters" on night shifts. Use of graphics and keywords in the post's body and headline is also mandatory. In addition to general guidelines, bloggers are also provided with "technical tasks" – keywords and talking points on specific issues, such as Ukraine, Russia's internal opposition and relations with the West. On an average working day, the workers are to post on news articles 50 times. Each blogger is to maintain six Facebook accounts publishing at least three posts a day and discussing the news in groups at least twice a day. By the end of the first month, they are expected to have won 500 subscribers and get at least five posts on each item a day. On Twitter, the bloggers are expected to manage 10 accounts with up to 2,000 followers and tweet 50 times a day.

Timeline

In 2015, Lawrence Alexander disclosed a network of propaganda websites sharing the same Google Analytics identifier and domain registration details, allegedly run by Nikita Podgorny from Internet Research Agency. The websites were mostly meme repositories focused on attacking Ukraine, Euromaidan, Russian opposition and Western policies. Other websites from this cluster promoted president Putin and Russian nationalism, and spread alleged news from Syria presenting anti-Western and pro-Bashar al-Assad viewpoints.
In August 2015, Russian researchers correlated Google search statistics of specific phrases with their geographic origin, observing increases in specific politically loaded phrases (such as "Poroshenko", "Maidan", "sanctions") starting from 2013 and originating from very small, peripheral locations in Russia, such as Olgino, which also happens to be the headquarters of the Internet Research Agency company. The Internet Research Agency also appears to be the primary sponsor of an anti-Western exhibition Material Evidence.

Since 2015, Finnish reporter Jessikka Aro has inquired into web brigades and Russian trolls. In addition, Western journalists have referred to the phenomenon and have supported traditional media.

In May 2019, it was reported that a study from the George Washington University found that Russian Twitter bots had tried to inflame the United States' anti-vaccination debate by posting opinions on both sides in 2018.

In June 2019, a group of 12 editors introducing coordinated pro-government and anti-opposition bias was blocked on the Russian-language Wikipedia. In July 2019, two operatives of the Internet Research Agency were detained in Libya and charged with attempting to influence local elections. They were reportedly employees of Alexander Malkevich, manager of USA Really, a propaganda website.

In 2020, the research firm Graphika published a report detailing one particular Russian disinformation group codenamed "Secondary Infektion" (alluding to the 1980s Operation Infektion), operating since 2014. Over 6 years the group published over 2,500 items in seven languages on over 300 platforms such as social media (Facebook, Twitter, YouTube, Reddit) and discussion forums. The group specialized in highly divisive topics regarding immigration, environment, politics, international relations and frequently used fake images presented as "leaked documents".

Starting in February 2022, a special attempt was made to back the Russian war in Ukraine.
Particular effort was made to target Facebook and YouTube.

Russian invasion of Ukraine

In May 2022, during the Russian invasion of Ukraine, the trolls allegedly hired by Internet Research Agency (IRA) had reportedly extended their foothold into TikTok, spreading misinformation on war events and attempting to question or sow doubt about the Ukraine war. Authentic-looking profiles had allegedly hundreds of thousands of followers. IRA was reported to be active across different platforms, including Instagram and Telegram.

Opinion-influencing operations in other countries

Other countries and businesses have used paid Internet commenters to influence public opinion in other countries; some examples are below. (Note: most operations in the list below are smaller in scale than Russia's web brigades, and not all have involved the spread of misinformation).
